I first learned about the 512KB Club two months ago, and I've been mildly obsessed with optimizing my website down to fit ever since.

The concept is beautifully simple: a collection of websites that load in under 512KB total, as measured by a Cloudflare scan. As someone who grew up with dial-up and has a proclivity for obscure challenges, I was immediately hooked.

My setup: Next.js 14 with App Router, Tailwind CSS v4, hosted on Vercel. Starting weight: ~600KB.

This seems totally doable.

The obvious optimization: the hero photo of my dog and me

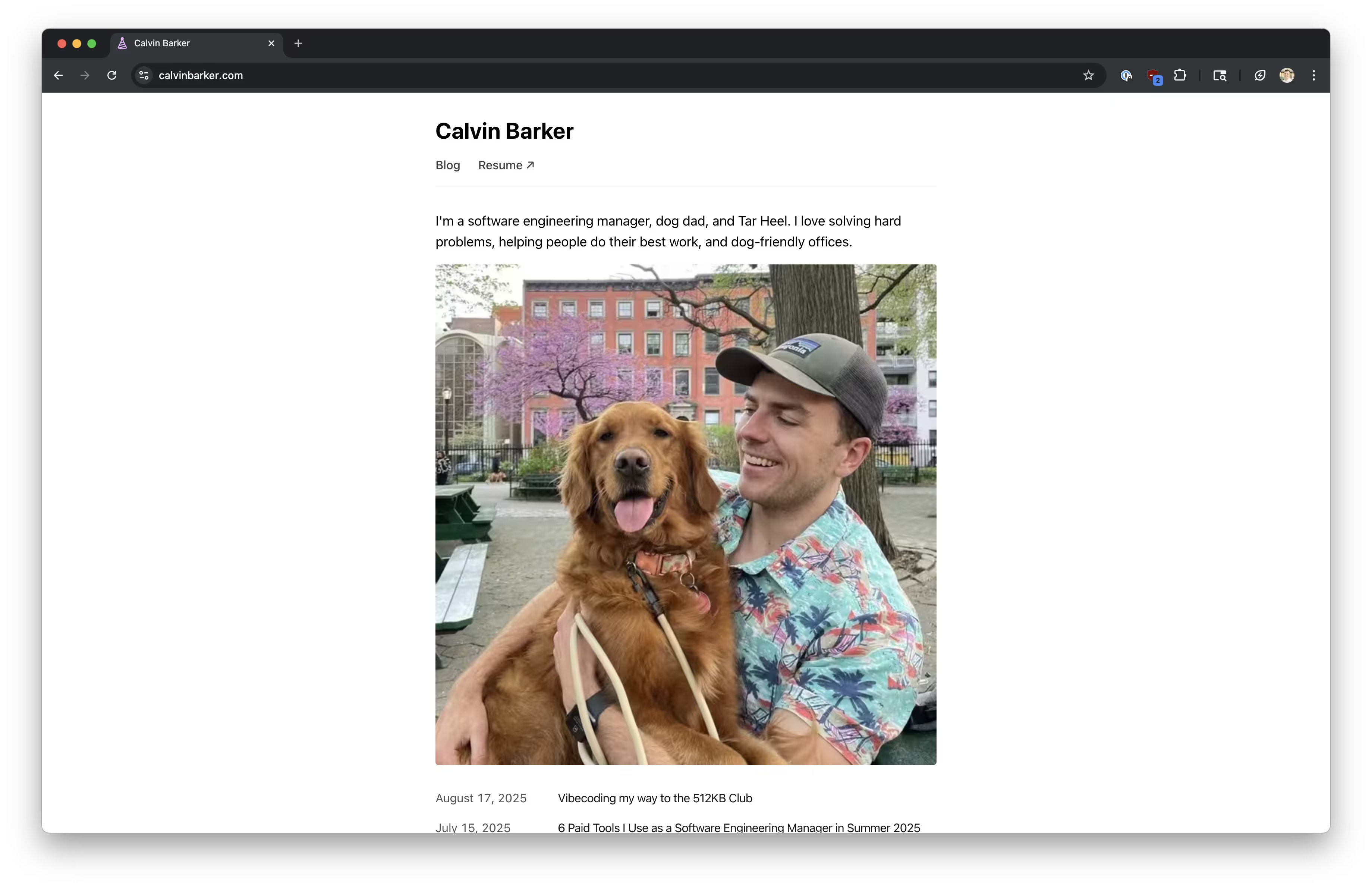

https://calvinbarker.com featuring me with my dog, Waffle.

At 145KB, removing calvin-waffle.jpg is an obvious target, but my dog is my life, so that's simply not an option.

Exporting the image as a new JPEG with lower quality makes me sad, so I seek wisdom from ChatGPT.

Iteration 1: JPEG to WebP

Google developed WebP to deliver image quality comparable to JPEG at a smaller file size, generally on the order of 20-50% smaller.

My first order of business is to convert the image to WebP.

```shell
# Using ImageMagick to convert the image.
brew install imagemagick
magick public/calvin-waffle.jpg public/calvin-waffle.webp
```

This brings the image from 209KB to 197KB. Basically nothing.

Iteration 2: WebP to AVIF

I grovel back to the Oracle of ChatGPT, saying that we need to make it smaller, to which it cheerfully replies, "You're absolutely right! We can certainly make it smaller!" and suggests AVIF.

Now, I actually don't know what this stands for, and as an engineer with frontend phobia, I don't think I've ever noticed this suffix. From Wikipedia:

> AV1 Image File Format (AVIF) is an open, royalty-free image file format specification for storing images or image sequences compressed with AV1 in the HEIF container format. It competes with HEIC, which uses the same container format built upon ISOBMFF, but HEVC for compression.

Good enough for me, and ImageMagick can convert WebP to AVIF in a one-liner.

```shell
magick public/calvin-waffle.webp public/calvin-waffle.avif
```

We're now at 71KB. I'd say we're in the money.

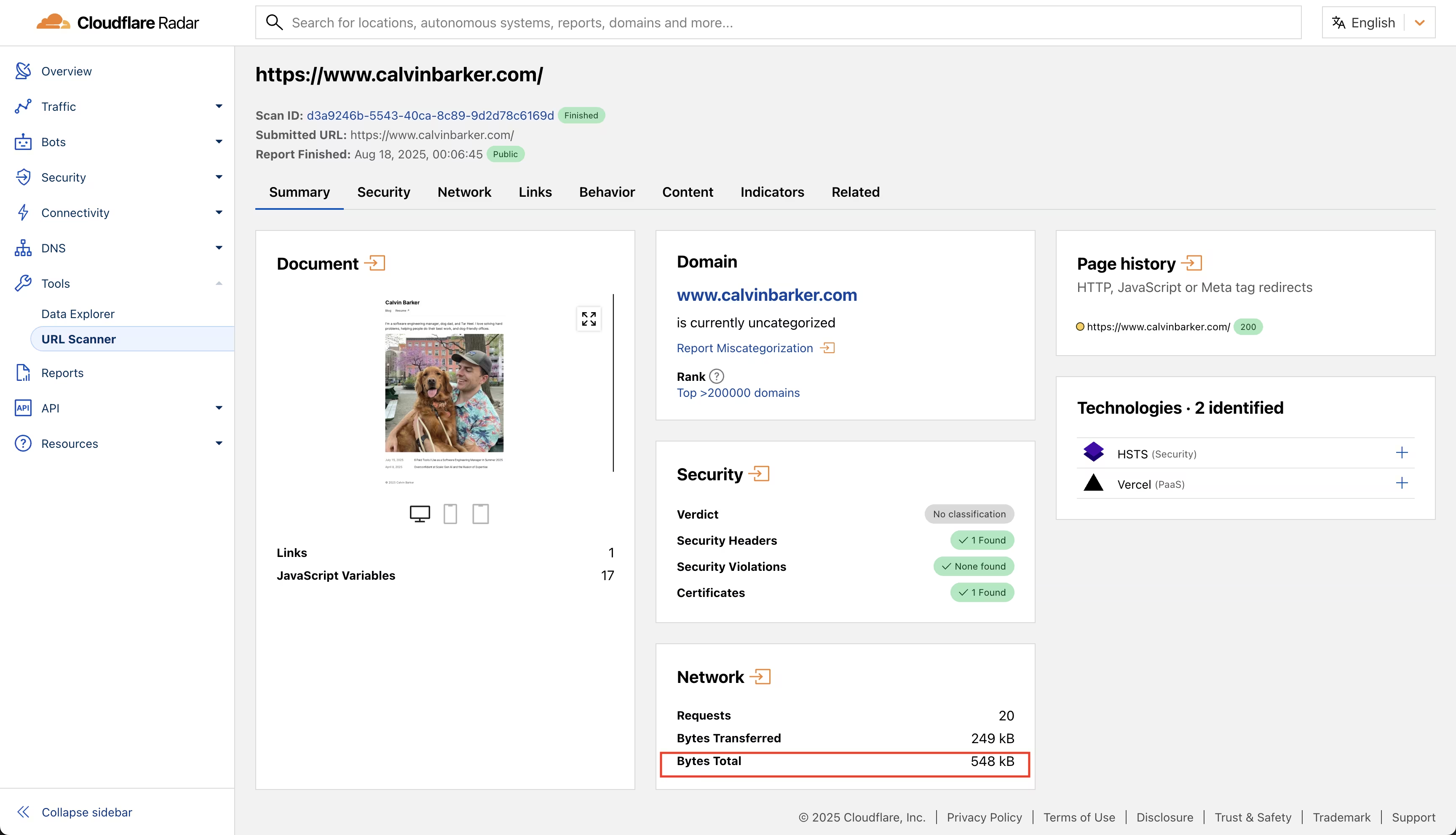

Closer. (source: Cloudflare)

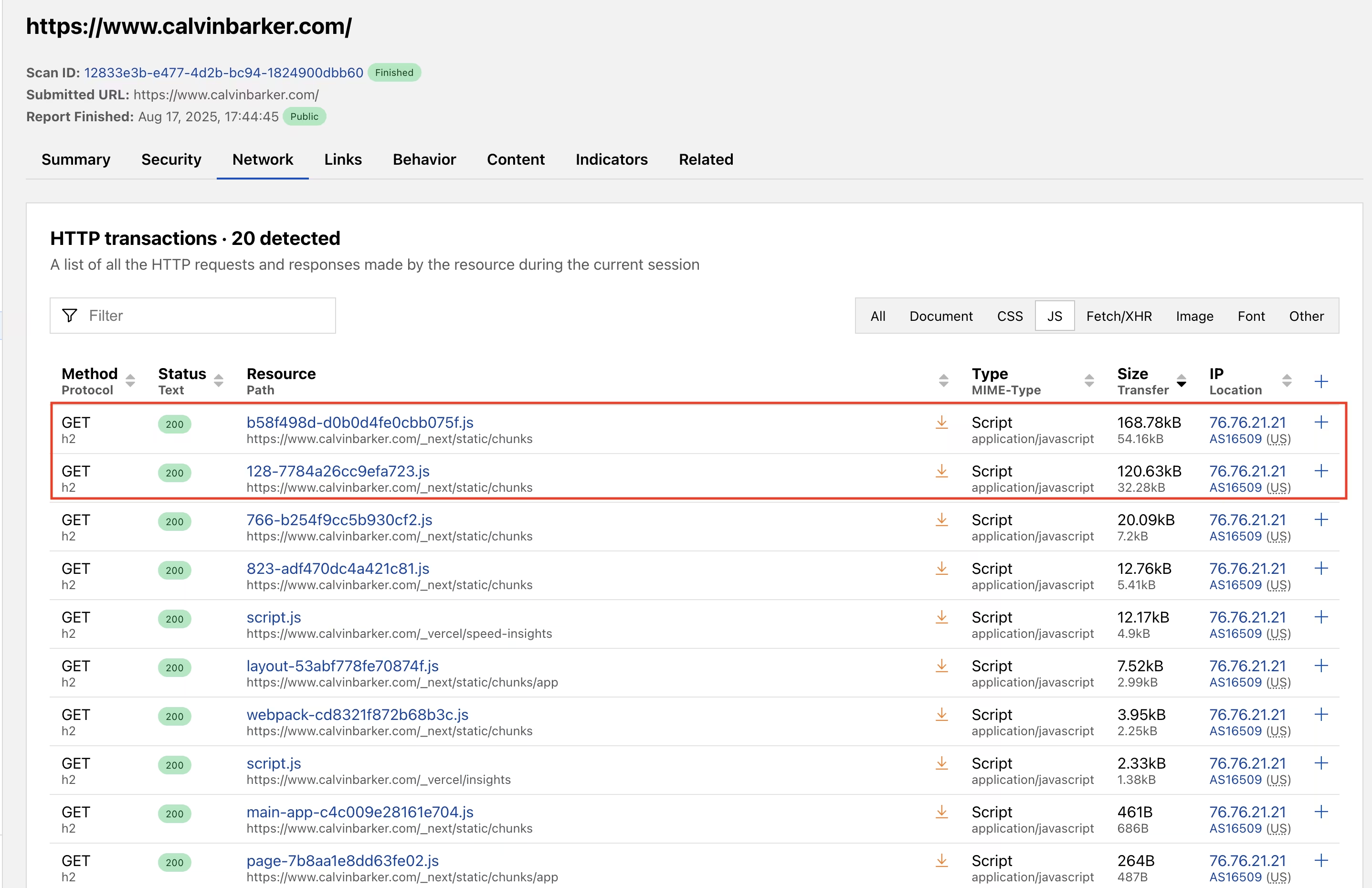

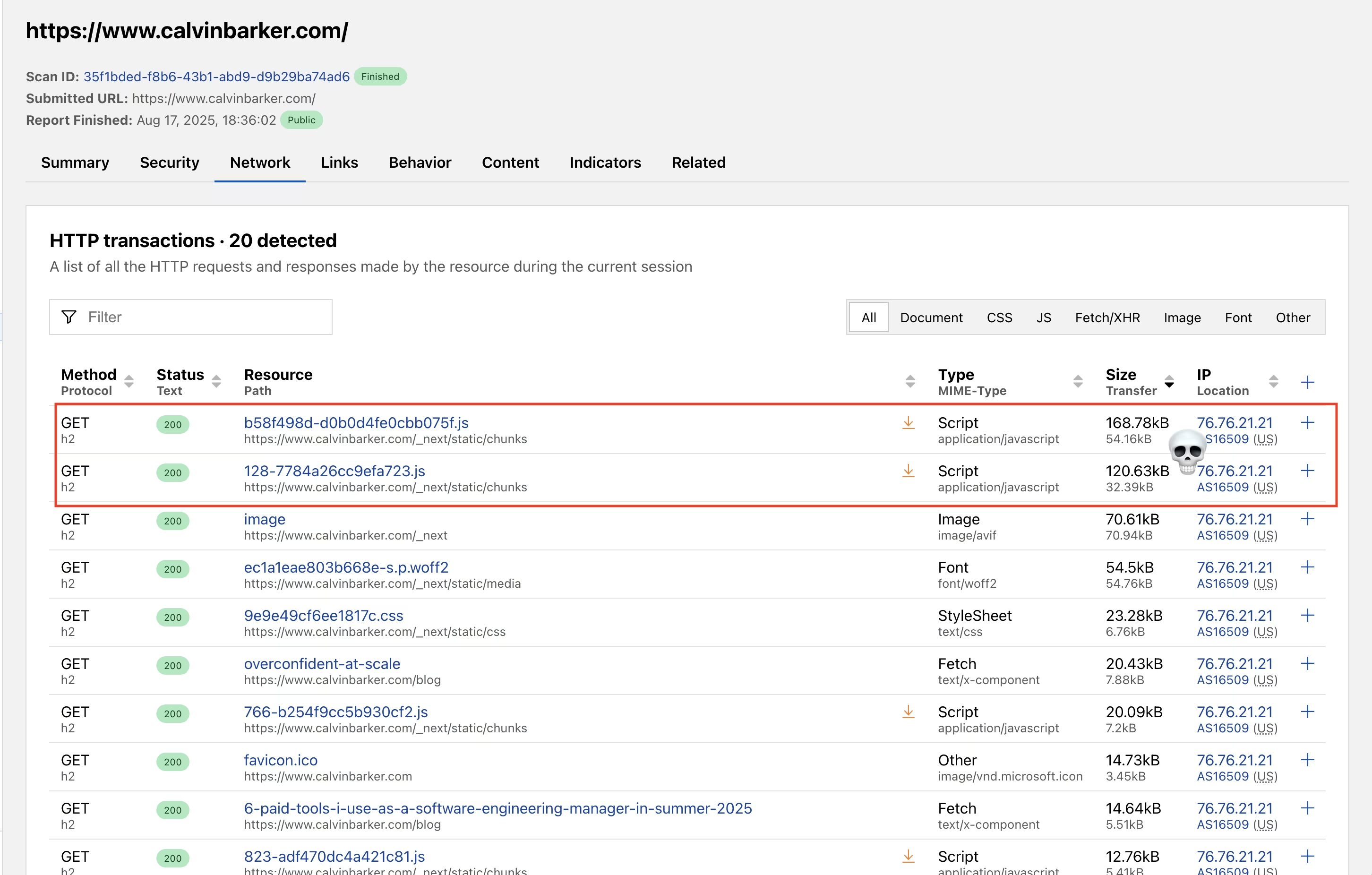

The less-obvious optimization: weird JavaScript files getting loaded

I run yet another Cloudflare scan to check my progress, but the needle hasn't moved nearly enough, thanks to two weird JavaScript files taking up 289KB.

Hmm... (source: Cloudflare)

I have no idea what these could possibly be, so I throw the problem at Cursor with this prompt:

> I ran a Cloudflare radar scan on my personal site, and it said this was the size:
>
> - Network Requests: 20
> - Bytes Transferred: 268 kB
> - Bytes Total: 573 kB
>
> These bytes total seem too high, so I looked at Network tab to see the biggest offenders. There are two scripts as the top offenders. What are these scripts, and how can we optimize them?

It hums along, and we end up with...

source: Cloudflare

...no change, although the total bytes went from 573KB to 567KB. Back to chatting with Cursor.

> All right, in the latest build, there wasn't any change in the loaded JavaScript files, although the total bytes went from 573 kilobytes to 567. I tried redeploying from Vercel (discarding the build cache), but still no change. Is there something we're missing in the build steps for Vercel?
>
> Here are the full logs from the Vercel build:

```text
[14:33:38.796] Running build in Washington, D.C., USA (East) - iad1
[14:33:38.797] Build machine configuration: 2 cores, 8 GB
...
```

While Cursor churns, I notice something interesting toward the end of the build logs:

```text
...
[14:34:26.894] + First Load JS shared by all 87.1 kB
[14:34:26.894] ├ chunks/128-7784a26cc9efa723.js 31.6 kB
[14:34:26.894] ├ chunks/b58f498d-d0b0d4fe0cbb075f.js 53.6 kB
[14:34:26.894] └ other shared chunks (total) 1.92 kB
...
```

Ah, heck. The Vercel artifacts look correct, so Cloudflare is still reading from a cache somewhere.

My weekend project to trim 100KB off my personal site may have turned into one of the two hard problems in computer science: cache invalidation.

> All right. Well, this seems like we can force some sort of code change to invalidate the cache, right?

> Absolutely! Great thinking! We can force cache invalidation by making a small code change that will trigger new file hashes and a fresh deployment. Let's make a tiny, harmless change that will force Next.js to regenerate all the bundles.
>
> Cursor (2025-08-17)

Thanks, Cursor. Let's see the change.

```jsx
...
const cx = (...classes) => classes.filter(Boolean).join(' ')

// Force cache invalidation - optimized bundles deployment
export default function RootLayout({
...
```

As live commentary goes, I don't love that I no longer understand what's happening, and I'm skeptical that an added comment is enough to trigger a new hash calculation, since minification strips comments from the production output.

Hmm! 🤔 The chunk hashes are exactly the same as before:

- chunks/128-7784a26cc9efa723.js

- chunks/b58f498d-d0b0d4fe0cbb075f.js

Looks like I still have a job for at least another month, but the problem still isn't solved.
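Why would an added comment leave the hashes untouched? Content-hashed file names are derived from the emitted output, not the source. Two plausible reasons apply here: the edited root layout probably isn't part of those shared chunks at all, and even if it were, minification typically strips comments before the hash is computed. Here is a minimal Node.js sketch of the second effect. SHA-256 truncated to 16 hex characters is a stand-in for webpack's real hash function, and `stripComments` is a hypothetical, deliberately naive replacement for a minifier.

```javascript
// Sketch: a content hash over the *minified* output ignores source comments.
// Assumptions: SHA-256 (truncated to 16 hex chars) stands in for the bundler's
// real hash function; stripComments() is a hypothetical, naive "minifier" that
// only removes whole-line // comments.
const { createHash } = require("node:crypto");

// Hash the emitted bytes, the way content-hashed file names are derived.
function contentHash(source) {
  return createHash("sha256").update(source).digest("hex").slice(0, 16);
}

// Hypothetical minifier step: drop lines that are only // comments, then trim.
function stripComments(source) {
  return source
    .split("\n")
    .filter((line) => !line.trim().startsWith("//"))
    .join("\n")
    .trim();
}

const before = "const cx = (...classes) => classes.filter(Boolean).join(' ')";
const after = before + "\n// Force cache invalidation - optimized bundles deployment";

const hashBefore = contentHash(stripComments(before));
const hashAfter = contentHash(stripComments(after));
console.log(hashBefore === hashAfter); // true: the comment never reaches the output
```

A real change to emitted code (not a comment) in a file that actually lands in a chunk is what would produce a new hash.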

Thought process 1: just wait for the cache to expire?

Do I have the mental fortitude to sit and wait for the cache to expire?

My brain: "No, Calvin. That's crazy talk. You must solve this now."

Thought process 2: Compressed vs Uncompressed Sizes

Ah, wait.

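This is not a cache invalidation problem. The Next.js build logs show gzip-compressed file sizes, but Cloudflare reports uncompressed sizes: the two chunks listed at 31.6 kB and 53.6 kB in the build log are the same scripts Cloudflare counts at 289KB once decompressed. I completely missed this fundamental difference and spent an hour debugging a non-existent caching issue.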

Interlude

I know that I don't need Next.js to run a website. I could totally build this site with plain, vanilla, boring HTML, but I don't want to write HTML. I just want to write Markdown files and have them work.

Back to business: resizing the hero image

As it stands, I need to trim at least 55KB more of uncompressed data off my site (567KB down to 512KB). The photo never renders larger than 672x672 because of the maximum container width, so I suppose a little image resizing can't hurt.

```shell
magick public/calvin-waffle.avif -resize 672x672 public/calvin-waffle.avif
```

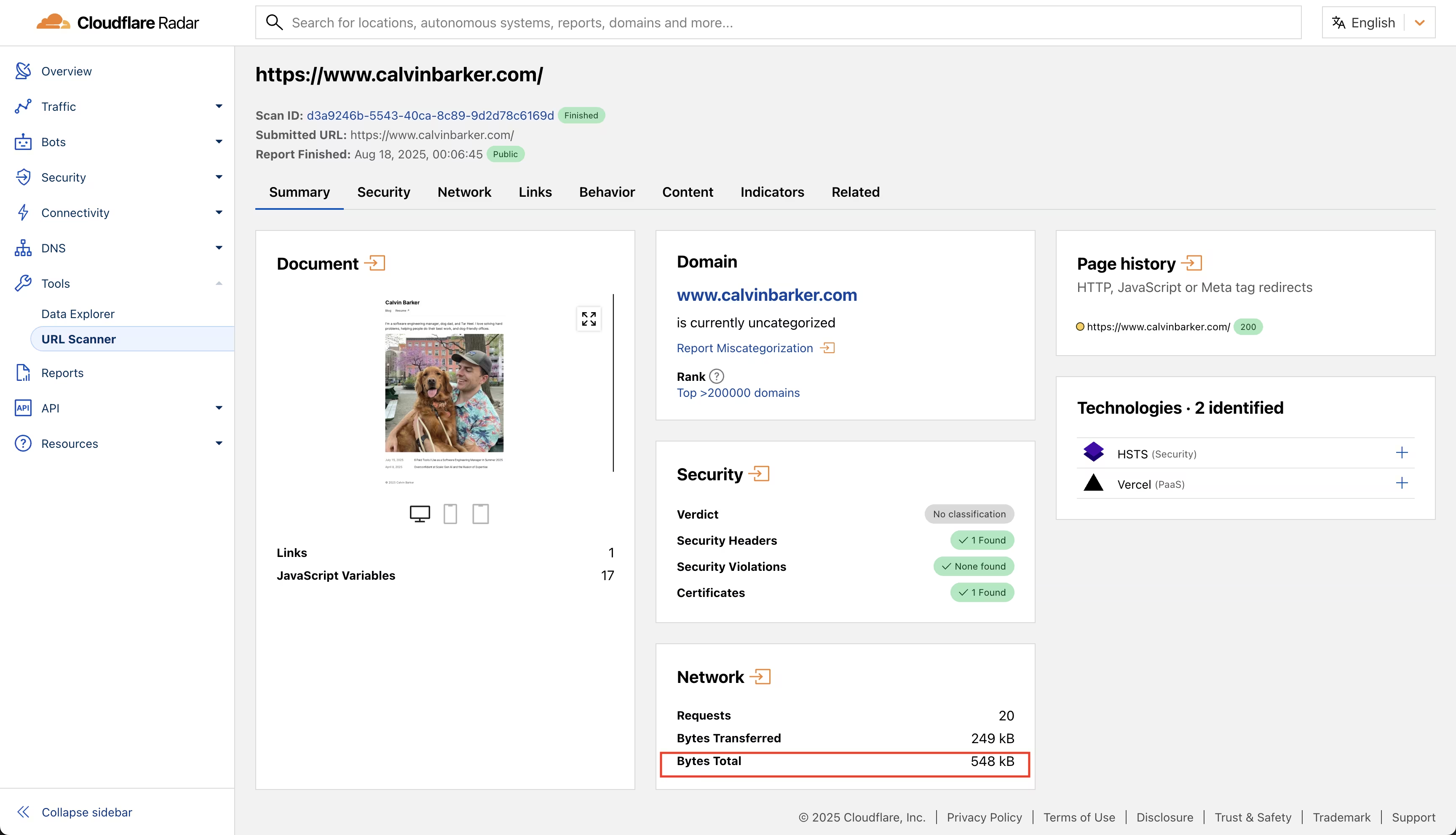

Closer. (source: Cloudflare)

This trims 19KB (from 71KB to 52KB) and brings the total page weight down to 548KB. I just need 36KB more.
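ImageMagick treats a `WxH` geometry like `672x672` as a bounding box: the image is scaled to fit inside it with its aspect ratio preserved, and a smaller image is enlarged. Appending `>` (quoted in the shell, e.g. `'672x672>'`) only shrinks. Here is a minimal sketch of that arithmetic; `fitWithin` is a hypothetical helper, the input dimensions are illustrative (the original photo's size isn't given), and ImageMagick's exact rounding may differ.

```javascript
// Sketch of "fit inside WxH, keep aspect ratio" resize arithmetic.
// Assumption: rounding to the nearest pixel; ImageMagick may round differently.
function fitWithin(width, height, maxWidth, maxHeight, onlyShrink = false) {
  // Largest uniform scale that keeps both sides inside the box.
  let scale = Math.min(maxWidth / width, maxHeight / height);
  // Mimic the ">" flag: never enlarge.
  if (onlyShrink) scale = Math.min(scale, 1);
  return { width: Math.round(width * scale), height: Math.round(height * scale) };
}

console.log(fitWithin(1344, 1344, 672, 672)); // { width: 672, height: 672 }
console.log(fitWithin(2000, 1000, 672, 672)); // { width: 672, height: 336 }
console.log(fitWithin(400, 400, 672, 672, true)); // { width: 400, height: 400 }
```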

Checking out the HAR

I give ChatGPT the Cloudflare-generated HAR file, and it points out that there's a 54.5KB custom font file. I don't need that bloat at all, so let's cut that out with a new Cursor chat:

> Minimal changes to get under 512 KB (pick 1-2)
>
> 1. Drop the custom font and use the system stack
>
> Savings: ~56 KB. Zero UX risk on a personal site.
>
> - Remove the font import/preload and any `@font-face` for that file.
> - In Tailwind or CSS, set:
>
> ```css
> :root { --font-sans: -apple-system, BlinkMacSystemFont, "Segoe UI", Roboto, "Helvetica Neue", Arial, "Noto Sans", "Apple Color Emoji", "Segoe UI Emoji", sans-serif; }
> html { font-family: var(--font-sans); }
> ```

BINGO. Dropping the custom font will do it.

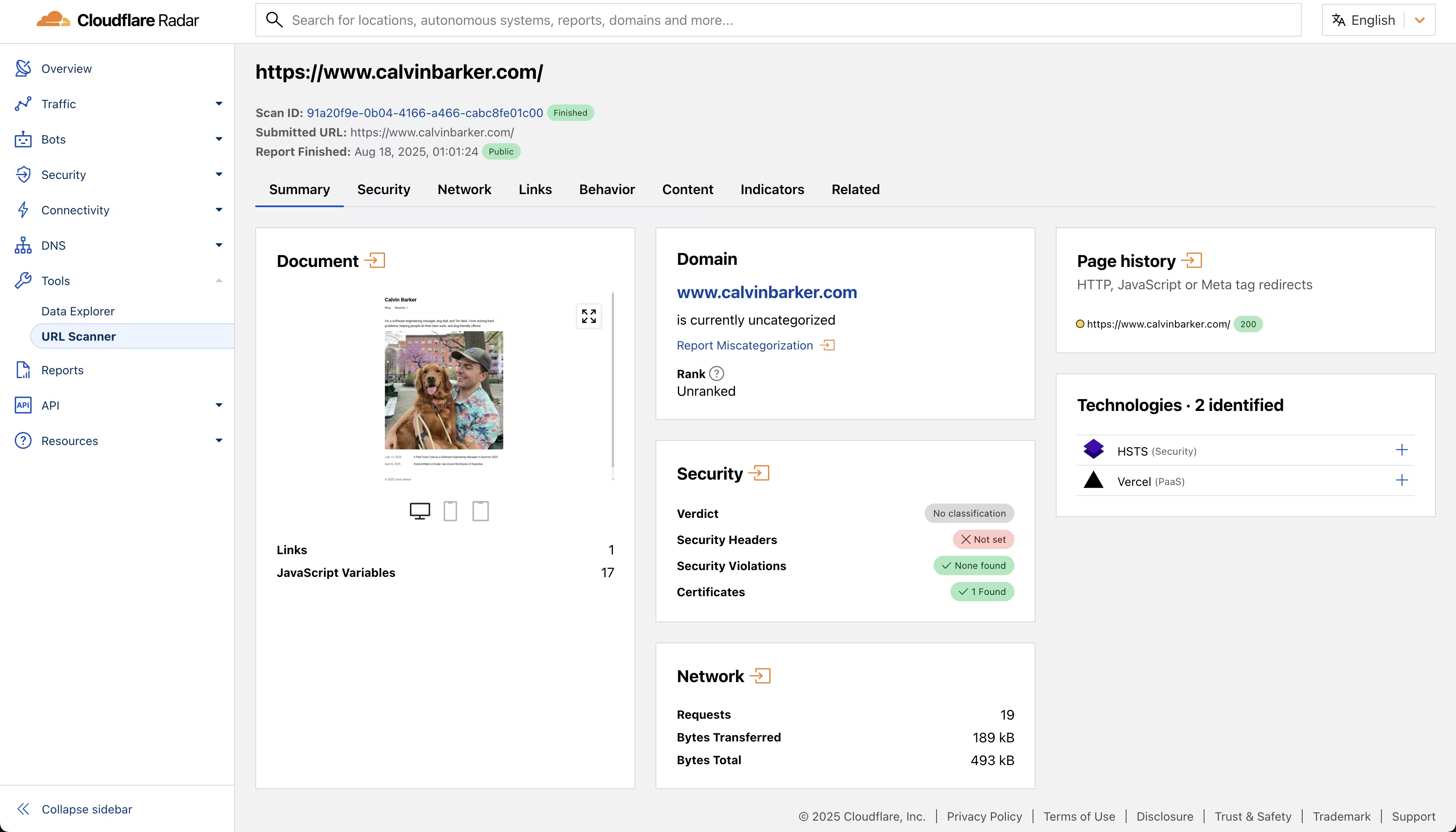

Mission accomplished. (source: Cloudflare)

We're now at 493KB. Perhaps there's more to cut, but it's late, and I want to go to bed.

Key learnings and reminders

- Understand the measurement - Next.js logs compressed sizes, Cloudflare reports uncompressed. This difference cost me an hour of debugging.

- Read the logs - The Vercel logs hinted at the answer all along; I just missed the compression detail.

- AVIF for images - From 209KB JPEG to 71KB AVIF (66% reduction).

- Image dimensions matter - Resizing to actual display size (672px) saved another 19KB.

- Custom fonts are expensive - 54KB for Geist Sans vs 0KB for system fonts on a personal site.

- LLMs excel at performance auditing - ChatGPT immediately identified the font bottleneck from the HAR file.

What's next?

This was a fun weekend exercise, and I learned a bit more about AVIF, bundle analysis tools, and ChatGPT's capacity to spot performance bottlenecks. The best part is that the biggest savings came from simply removing a custom font.

Other options include revisiting the JavaScript files and trimming down the favicon, but Waffle's picture stays, obviously.